Sovereign Acoustic Inference: Real Sub-1s Latency.

Deploy speech-to-speech translation infrastructure with full data control. No cloud round trips. No third-party dependencies. Industrial-grade performance, 100% on-premise.

The Next-Generation Acoustic Intelligence Engine

Liora Flow is not just translation software; it is ultra-low-latency infrastructure designed to process human language at the speed of thought. By eliminating cloud dependency, we deliver absolute data sovereignty, total privacy, and performance that outpaces current market offerings.

Performance at Scale

E2E Latency

Full pipeline across 7 simultaneous languages, with bidirectional conversational flow that stays under one second end to end.

OPEX Reduction

Eliminate variable Azure/Google Cloud invoices. Full sovereignty with marginal operational cost.

RTX-Optimized Architecture

Parallel inference across 7 simultaneous languages on consumer-grade NVIDIA RTX hardware. Data-center-class performance on your local network.

Massive Ingestion Architecture

The Proprietary Orchestrator: Efficiency Without Compromise

Our proprietary engine is built for scale. Where other systems degrade under heavy audio-processing load, Liora Flow stays stable by filtering audio cheaply on the CPU before it ever reaches the GPU.

World-Class VAD

Our Voice Activity Detector (VAD) processes each audio frame in just 1.3µs, fast enough to support up to 13,000 concurrent users on a single commercial CPU node.
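To see how the per-frame cost relates to the concurrency figure, the arithmetic below converts it into CPU time. The frame length is not stated here, so the sketch assumes 30 ms frames and, for comparison, 10 ms frames; `vad_cpu_cores_needed` is a hypothetical helper, and the result counts VAD work only.

```python
def vad_cpu_cores_needed(users: int, frame_ms: float, us_per_frame: float) -> float:
    """Estimate how many fully busy CPU cores the VAD stage alone would use.

    Each user produces 1000 / frame_ms frames per second, and each frame
    costs us_per_frame microseconds of CPU time (hypothetical helper).
    """
    frames_per_second = users * (1000.0 / frame_ms)
    # CPU-seconds consumed per wall-clock second equals cores kept busy.
    return frames_per_second * us_per_frame / 1_000_000


# 1.3 µs/frame and 13,000 users come from the text; frame lengths are assumptions.
cores_30ms = vad_cpu_cores_needed(13_000, frame_ms=30, us_per_frame=1.3)
cores_10ms = vad_cpu_cores_needed(13_000, frame_ms=10, us_per_frame=1.3)
print(f"30 ms frames: {cores_30ms:.2f} cores")  # 30 ms frames: 0.56 cores
print(f"10 ms frames: {cores_10ms:.2f} cores")  # 10 ms frames: 1.69 cores
```

Under either assumption, VAD for 13,000 streams needs less than two cores' worth of CPU time, which is consistent with running it on a single multi-core node.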

Selective Neural Filter

Before any GPU resources are used, a neural classifier validates the signal in 1.9ms. Audio that is not useful human speech is discarded, so no GPU time is spent on it. Pure efficiency.
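The gating idea can be sketched as two cheap checks placed in front of the GPU queue. This is a minimal illustration, not Liora Flow's implementation: `GatedPipeline`, `energy_vad`, and `dummy_classifier` are hypothetical, and the energy threshold and sample-count score are crude placeholders for a real VAD and a real neural classifier.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class GatedPipeline:
    """Two cheap CPU gates in front of an expensive GPU stage (hypothetical)."""
    is_voiced: Callable[[Sequence[float]], bool]      # stand-in for the VAD
    speech_score: Callable[[Sequence[float]], float]  # stand-in for the neural classifier
    threshold: float = 0.5
    gpu_queue: List[Sequence[float]] = field(default_factory=list)
    dropped: int = 0

    def submit(self, segment: Sequence[float]) -> bool:
        # Gate 1: discard silence before doing any further work.
        if not self.is_voiced(segment):
            self.dropped += 1
            return False
        # Gate 2: discard voiced audio the classifier does not rate as useful speech.
        if self.speech_score(segment) < self.threshold:
            self.dropped += 1
            return False
        # Only segments that pass both gates are queued for GPU inference.
        self.gpu_queue.append(segment)
        return True


def energy_vad(segment: Sequence[float]) -> bool:
    # Placeholder: mean-square energy threshold, not a production VAD.
    return sum(x * x for x in segment) / max(len(segment), 1) > 0.01


def dummy_classifier(segment: Sequence[float]) -> float:
    # Placeholder score: fraction of samples with magnitude above 0.2.
    return sum(1 for x in segment if abs(x) > 0.2) / max(len(segment), 1)


pipe = GatedPipeline(is_voiced=energy_vad, speech_score=dummy_classifier)
pipe.submit([0.0] * 160)       # silence: rejected by gate 1
pipe.submit([0.15] * 160)      # voiced but low score: rejected by gate 2
pipe.submit([0.5, -0.5] * 80)  # passes both gates
print(len(pipe.gpu_queue), pipe.dropped)  # 1 2
```

Ordering the gates from cheapest to most expensive means most non-speech audio is rejected by the lightest check.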

Zero Buffer Lag

With buffer delay under 5ms, communication flows without the interruptions typical of services based on external REST APIs.
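A bounded buffering delay follows from emitting audio in fixed, short chunks. The sketch below assumes 16 kHz mono audio (the sample rate is not stated in the text) and uses hypothetical helpers `chunk_samples` and `stream_chunks`; under that assumption, a 5 ms bound corresponds to 80-sample chunks.

```python
from typing import Iterable, Iterator, List


def chunk_samples(sample_rate_hz: int, max_delay_ms: float) -> int:
    """Largest chunk whose duration does not exceed max_delay_ms."""
    return int(sample_rate_hz * max_delay_ms / 1000)


def stream_chunks(samples: Iterable[float], chunk_size: int) -> Iterator[List[float]]:
    """Emit audio as soon as chunk_size samples have accumulated.

    No sample waits in this buffer longer than one chunk duration; a final
    partial chunk is flushed at the end of the stream.
    """
    buf: List[float] = []
    for s in samples:
        buf.append(s)
        if len(buf) == chunk_size:
            yield buf
            buf = []
    if buf:
        yield buf


size = chunk_samples(16_000, 5)  # 16 kHz is an assumed sample rate
chunks = list(stream_chunks([0.0] * 200, size))
print(size, [len(c) for c in chunks])  # 80 [80, 80, 40]
```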

The Brain: Real-Time Inference

Extreme Performance on RTX 5070 Ti

We have optimized the inference pipeline to extract every teraflop of power from the hardware. Liora Flow breaks the one-second barrier, delivering instant multilingual results.

| Process | Latency (P50) | Notes |
|---|---|---|
| Speech Recognition (STT) | 157ms | Precise speech-to-text transcription. |
| Translation (7 Languages) | 186ms | Concurrent parallel inference. |
| Voice Synthesis (TTS) | 80ms | Immediate natural audio generation. |
| Full Pipeline | 812ms | Sub-1s across 7 simultaneous languages. |
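The translation row covers seven languages at once, which is possible when the per-language requests run concurrently: wall-clock time then tracks the slowest request rather than the sum of all of them. The sketch below illustrates that fan-out pattern with Python's `asyncio.gather`. It is an assumption-laden illustration: `translate` and `fan_out` are hypothetical, `translate` sleeps instead of running a model, and real GPU concurrency additionally depends on batching and device scheduling.

```python
import asyncio
import time
from typing import Dict, List


async def translate(text: str, lang: str, delay_s: float) -> str:
    # Hypothetical stand-in for one model call; sleeping simulates inference time.
    await asyncio.sleep(delay_s)
    return f"[{lang}] {text}"


async def fan_out(text: str, langs: List[str], delay_s: float) -> Dict[str, str]:
    # Start every target language at once; gather waits for the slowest one
    # and returns results in the same order as the inputs.
    results = await asyncio.gather(*(translate(text, lang, delay_s) for lang in langs))
    return dict(zip(langs, results))


# en/es/de/zh appear in the Teams demo; fr/it/pt are illustrative extras.
LANGS = ["en", "es", "de", "zh", "fr", "it", "pt"]

start = time.perf_counter()
out = asyncio.run(fan_out("hola", LANGS, delay_s=0.05))
elapsed = time.perf_counter() - start
print(len(out), f"{elapsed:.2f}s")  # 7 results; elapsed is near one delay (0.05 s), not seven
```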

Core API: The infrastructure of the future, today.

We are finalizing our cluster deployment to offer Liora's engine through an elastic, pay-per-use API. Integrate the fastest acoustic engine on the market into your own applications.

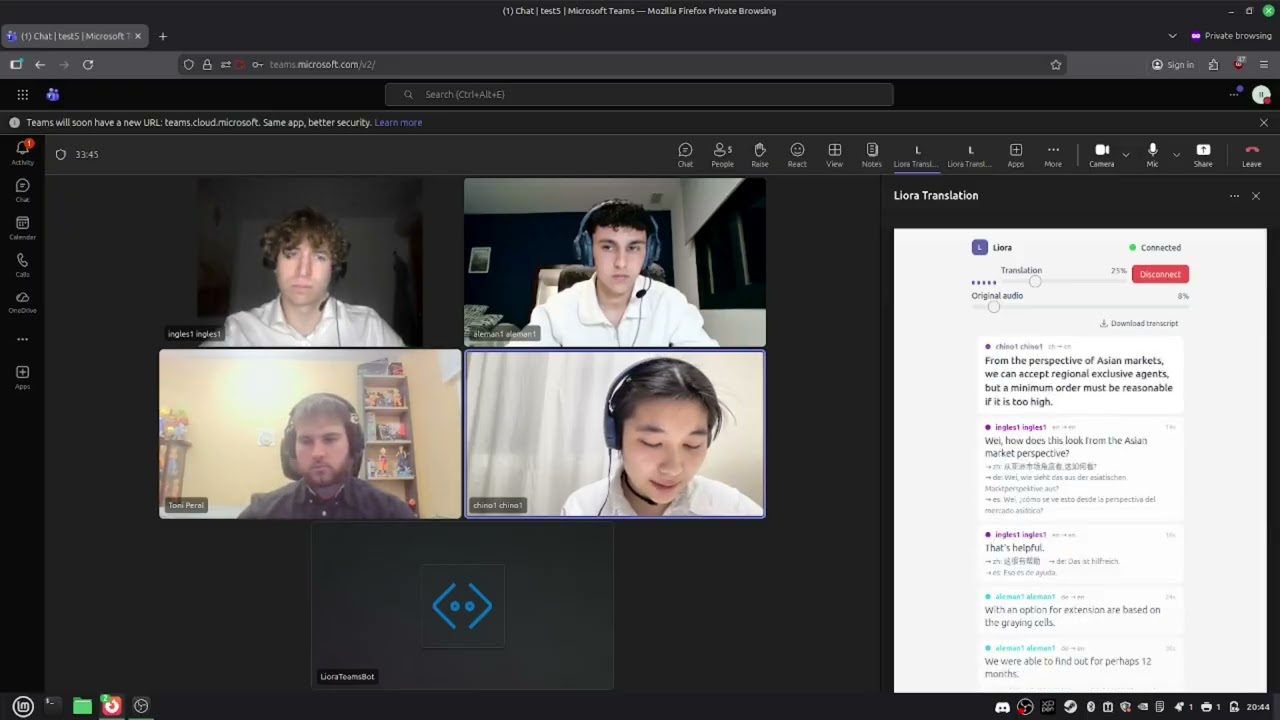

Join the waitlist

Technical Integration: Live Multilingual Teams Call

Real-time translation across English, Spanish, German, and Chinese within Microsoft Teams.


From theory to extreme reverberation.

We deployed Liora Flow at the Cathedral of Santiago de Compostela, one of the most hostile acoustic environments in the world.

Extreme Acoustics

Thousand-year-old stone with reverberation times exceeding 3 seconds, conditions that severely degrade conventional speech recognition systems.

Critical Sessions

Over 5 hours of continuous processing without loss of accuracy.

Data Sovereignty

100% local processing. Data never leaves the premises, so it stays under your control whatever the applicable legal framework.

Industrial-Grade Security & Resilience

Designed for uninterrupted operation, with intelligent circuit breakers protecting each critical resource.

Memory Protection

Active monitoring prevents RAM saturation: the operational limit is set at 70% of system memory to keep the service stable.
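A memory circuit breaker of this kind can be sketched as admission control with a trip threshold. In the sketch, `MemoryBreaker` is hypothetical, the 0.70 limit mirrors the figure above, and the 0.65 resume level is an assumed hysteresis margin so the breaker does not flap around the limit. The `used_fraction` input could come, for example, from `psutil.virtual_memory().percent / 100`, though the sketch takes it as a plain number to stay self-contained.

```python
from dataclasses import dataclass


@dataclass
class MemoryBreaker:
    """Stop admitting new audio streams while RAM usage is too high (hypothetical).

    limit mirrors the 70% operational limit; resume_below is an assumed
    hysteresis level at which new streams are accepted again.
    """
    limit: float = 0.70
    resume_below: float = 0.65
    tripped: bool = False  # tripped breaker = new streams are rejected

    def allow_new_stream(self, used_fraction: float) -> bool:
        if self.tripped and used_fraction < self.resume_below:
            self.tripped = False  # memory has recovered: reset the breaker
        elif not self.tripped and used_fraction >= self.limit:
            self.tripped = True   # limit reached: trip the breaker
        return not self.tripped


breaker = MemoryBreaker()
decisions = [breaker.allow_new_stream(u) for u in (0.50, 0.72, 0.68, 0.60)]
print(decisions)  # [True, False, False, True]
```

Existing streams keep running while the breaker is tripped; only new work is turned away, which is what keeps memory from climbing further.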

CPU Load Management

Dynamic load balancing across threads keeps the system responsive as load varies.
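The text does not specify the balancing policy. As an illustration only, the sketch below uses least-loaded assignment, a common simple policy in which each new stream goes to the worker with the fewest active streams. `LeastLoadedDispatcher` is a hypothetical name, not part of Liora Flow.

```python
from typing import Dict


class LeastLoadedDispatcher:
    """Route each new audio stream to the worker with the fewest active streams."""

    def __init__(self, n_workers: int) -> None:
        self.load: Dict[int, int] = {w: 0 for w in range(n_workers)}

    def assign(self) -> int:
        # min() over a small dict is O(n_workers), cheap for a thread pool;
        # ties go to the lowest worker id because dicts keep insertion order.
        worker = min(self.load, key=self.load.__getitem__)
        self.load[worker] += 1
        return worker

    def release(self, worker: int) -> None:
        # Called when a stream ends so the worker becomes eligible again.
        self.load[worker] -= 1


d = LeastLoadedDispatcher(3)
first = [d.assign() for _ in range(4)]
d.release(1)
after_release = d.assign()
print(first, after_release, d.load)  # [0, 1, 2, 0] 1 {0: 2, 1: 1, 2: 1}
```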

Hardware Isolation

Efficient VRAM usage leaves 29% of GPU memory in reserve even at peak load, providing headroom for unexpected traffic bursts.
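To put the 29% reserve in absolute terms, the arithmetic below assumes the 16 GB of VRAM on the RTX 5070 Ti named above; the text does not state which GPU the figure was measured on, and `vram_budget` is a hypothetical helper.

```python
from typing import Tuple


def vram_budget(total_gb: float, reserve_fraction: float) -> Tuple[float, float]:
    """Split GPU memory into the peak working set and the reserved headroom."""
    reserve_gb = total_gb * reserve_fraction
    return total_gb - reserve_gb, reserve_gb


# 16 GB is an assumption (RTX 5070 Ti); 0.29 is the peak-load reserve quoted above.
used_gb, reserve_gb = vram_budget(16.0, 0.29)
print(f"peak use {used_gb:.2f} GB, reserve {reserve_gb:.2f} GB")
# peak use 11.36 GB, reserve 4.64 GB
```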

Deployment Flexibility: Liora Flow Your Way

Not all organizations face the same acoustic or privacy challenges. That's why we offer three access models for our technology, each delivering the sub-1s performance that defines us.

Liora Cloud API

Developers, Startups and SaaS applications.

Integrate the fastest translation engine on the market in minutes. Our infrastructure manages the hardware so you can focus on user experience.

Model: Pay per use (minutes processed).

Advantage: Instant elastic scalability with no upfront hardware investment.

Latency: Optimized through high-priority bursts.

On-Premise License

Large corporations, Legal, Healthcare and Government.

Total data control. We install Liora Flow directly on your local infrastructure or private cloud via optimized Docker containers.

Model: Annual recurring license (on-premise subscription).

Advantage: Absolute sovereignty. Data never leaves your local network (LAN), which simplifies GDPR compliance.

Requirement: Servers with NVIDIA GPU (RTX Series or Data Center).

Hardware Appliance (Liora Box)

Live events, Conference centers and Mobile units.

The turnkey solution. We deliver a pre-configured physical server specifically optimized for the Liora Flow engine.

Model: Hardware sale + Software license.

Advantage: Plug & Play. Connect audio and start processing. No configuration or external dependencies.

Hardware: Optimized workstations with RTX 5070 / 5080 GPUs.

Technical FAQ

This section addresses the integration, security and performance challenges of Liora Flow.

Start Your Project with Liora Flow

Tell us about your technical challenge. Our engineering team will analyze your use case to propose the most efficient inference architecture.

Built for Infrastructure

Powered by a proprietary orchestrator engineered for ultra-low latency and massive concurrency. Every component runs on-premise — from speech recognition to neural translation to voice synthesis.

Native Orchestrator

Custom-built runtime for real-time audio pipeline management with sub-millisecond scheduling.
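The scheduling policy is not described here. One common approach for real-time audio is earliest-deadline-first (EDF) dispatch on a binary heap, where submitting or retrieving a job costs O(log n). The sketch below is a swapped-in illustration of that technique, not the orchestrator's actual scheduler; `DeadlineScheduler` and the job labels are hypothetical.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class DeadlineScheduler:
    """Earliest-deadline-first queue for audio pipeline jobs (illustrative)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        # Sequence counter breaks ties so equal deadlines keep FIFO order.
        self._seq = itertools.count()

    def submit(self, job: str, deadline_ms: float) -> None:
        heapq.heappush(self._heap, (deadline_ms, next(self._seq), job))

    def next_job(self) -> Optional[str]:
        # Pop the job whose deadline is soonest; returns None when idle.
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


s = DeadlineScheduler()
s.submit("tts:stream-7", deadline_ms=40)
s.submit("stt:stream-2", deadline_ms=15)
s.submit("mt:stream-2", deadline_ms=15)
order = [s.next_job() for _ in range(4)]
print(order)  # ['stt:stream-2', 'mt:stream-2', 'tts:stream-7', None]
```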

100% On-Premise

Zero external API calls. All models run locally on your infrastructure.

Massive Concurrency

Handle hundreds of simultaneous audio streams with a native concurrency architecture.
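One common way to keep hundreds of streams in flight without overloading the host is to cap concurrency with an async semaphore. The sketch below is a hypothetical illustration (`run_streams` and the cap of 200 are assumptions, and `asyncio.sleep` stands in for real processing), not a description of Liora Flow's native architecture.

```python
import asyncio


async def run_streams(n_streams: int, max_concurrent: int) -> int:
    """Process n_streams with at most max_concurrent in flight; return the observed peak."""
    sem = asyncio.Semaphore(max_concurrent)
    active = 0
    peak = 0

    async def handle(stream_id: int) -> None:
        nonlocal active, peak
        async with sem:                # waits here once the cap is reached
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0.01)  # stand-in for per-stream STT/MT/TTS work
            active -= 1

    await asyncio.gather(*(handle(i) for i in range(n_streams)))
    return peak


observed = asyncio.run(run_streams(500, max_concurrent=200))
print(observed)  # never exceeds the cap of 200
```

Streams beyond the cap queue at the semaphore instead of failing, so a burst is absorbed as added wait time rather than as resource exhaustion.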